Tonight was protocol study night at my local hackerspace, where 5 to 10 people get together every Wednesday to dissect networking protocols and see what makes them tick. We use a combination of Wireshark, The TCP/IP Guide (the bible), and the Internet RFC archive to rip protocols apart and analyze live traffic as a group. Tonight the subject was HTTP, with a focus on FireSheep and two tools meant to mitigate it, BlackSheep and FireShepard.

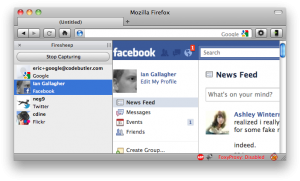

So by now everyone has heard of FireSheep. The concepts are nothing new but the author put everything into a pretty little browser plugin that makes it super easy for ANYONE to steal your Facebook, Twitter, etc credentials. Within a day or two of FireSheep being released, BlackSheep quickly followed. The premise of BlackSheep is that it is supposed to protect you from users of FireSheep and not allow said users to steal your credentials. This would be nice if it actually worked. I’m here to tell you that it does NOT work. FireSheep looks like this:

Both BlackSheep and FireShepard attempt a denial-of-service attack of sorts against the person running FireSheep. They spam FireSheep with fake sessions and credentials that show your name but won’t actually log anyone in. These decoys show up in the attacker’s FireSheep window and try to bury the real entries under a flood of noise. The problem is that your working credentials are still in that list and can still be used: the attacker merely has to sort through the fakes, find the real ones, and click on them. FireShepard fails even harder here. Its spoofed HTTP requests contain several fields that are always identical. My favorite was this one:

```
request+="GET /packetSniffingKillsKittens HTTP/1.1\r\n";
```

Even if FireShepard worked better than it does (which is to say, hardly at all), someone running FireSheep could easily weed out all of its spoofed credentials by filtering on that phrase.
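To show how little effort that filter takes, here is a minimal Python sketch. It assumes the captured requests are already available as raw HTTP request strings (the capture step is out of scope), and the helper names `is_fireshepard_decoy` and `drop_decoys` are hypothetical; the only detail taken from FireShepard is the request line quoted above.

```python
# Request line that FireShepard reuses for every spoofed request (quoted above).
DECOY_REQUEST_LINE = "GET /packetSniffingKillsKittens HTTP/1.1"


def is_fireshepard_decoy(raw_request: str) -> bool:
    """Return True if a raw HTTP request starts with FireShepard's fixed request line.

    Hypothetical helper: assumes `raw_request` is the full request text with
    CRLF line endings, as HTTP/1.1 requires.
    """
    request_line = raw_request.split("\r\n", 1)[0]
    return request_line == DECOY_REQUEST_LINE


def drop_decoys(raw_requests: list[str]) -> list[str]:
    """Keep only the requests that did not come from FireShepard's spoofer."""
    return [r for r in raw_requests if not is_fireshepard_decoy(r)]


if __name__ == "__main__":
    captured = [
        "GET /packetSniffingKillsKittens HTTP/1.1\r\nHost: www.facebook.com\r\n\r\n",
        "GET /home.php HTTP/1.1\r\nHost: www.facebook.com\r\n\r\n",
    ]
    remaining = drop_decoys(captured)
    print(len(remaining))                    # 1
    print(remaining[0].split("\r\n", 1)[0])  # GET /home.php HTTP/1.1
```

A single constant string is all it takes, which is why decoys with a fixed signature add no real protection.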

BlackSheep, on the other hand, attempts to detect whether the fake credentials are being used and is supposed to alert you when they are. In our testing, however, we saw no sign that this feature worked.
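We didn’t dig into how BlackSheep implements its detection internally, but the general idea of watching for planted decoys (often called a honeytoken) can be sketched in Python. This is not BlackSheep’s code: the class `DecoyRegistry` and the cookie name `session_id` are hypothetical. The logic is that only someone who sniffed a decoy off the wire should ever send it back, so a match is a strong hint that a sidejacking tool is in use.

```python
import secrets
from http.cookies import SimpleCookie


class DecoyRegistry:
    """Tracks fake session IDs (honeytokens) that were deliberately leaked.

    Hypothetical illustration of decoy detection, not BlackSheep's implementation.
    """

    def __init__(self) -> None:
        self._issued: set[str] = set()

    def issue(self) -> str:
        """Create a random decoy session ID and remember it."""
        token = secrets.token_hex(16)
        self._issued.add(token)
        return token

    def is_replayed(self, cookie_header: str, cookie_name: str = "session_id") -> bool:
        """Return True if a request's Cookie header carries one of our decoys."""
        cookies = SimpleCookie()
        cookies.load(cookie_header)
        morsel = cookies.get(cookie_name)
        return morsel is not None and morsel.value in self._issued


if __name__ == "__main__":
    registry = DecoyRegistry()
    decoy = registry.issue()
    print(registry.is_replayed(f"session_id={decoy}"))  # True
    print(registry.is_replayed("session_id=deadbeef"))  # False
```

In practice the hard part is seeing the replayed request at all, since the attacker sends it to the real site rather than to you.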

If FireSheep isn’t scary enough, we also observed some worrying behavior in Facebook’s cookies. Most notably, we explicitly hit logout on the Facebook session, closed the browser, and cleared and restarted FireSheep. When we reopened the browser and went to Facebook, we were not yet signed in on the Facebook page, but when we switched BACK to FireSheep, we were already logged in! In other words, merely visiting Facebook’s page was enough to transmit a cookie that allowed a full login.
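One plausible explanation, which we did not verify, is a persistent cookie that outlives both the logout and the browser restart. Per the cookie spec, a cookie with an `Expires` or `Max-Age` attribute is kept across restarts, and a cookie without the `Secure` flag is also sent over plain HTTP, where FireSheep can see it. The Python sketch below checks a `Set-Cookie` header for those two properties; the helper `audit_set_cookie` is hypothetical and the example headers are made up, not captured from Facebook.

```python
from http.cookies import SimpleCookie


def audit_set_cookie(set_cookie_value: str) -> dict[str, dict[str, bool]]:
    """Report, per cookie, whether it persists across restarts and whether it is HTTPS-only.

    `set_cookie_value` is the value of a single Set-Cookie response header.
    """
    jar = SimpleCookie()
    jar.load(set_cookie_value)
    report = {}
    for name, morsel in jar.items():
        report[name] = {
            # Expires or Max-Age tells the browser to keep the cookie after it closes.
            "persistent": bool(morsel["expires"] or morsel["max-age"]),
            # Without Secure, the browser will also send the cookie over plain HTTP.
            "secure": bool(morsel["secure"]),
        }
    return report


if __name__ == "__main__":
    # Made-up header: long-lived and not Secure, the worst case for sidejacking.
    print(audit_set_cookie("session=abc123; Max-Age=7776000; Path=/; HttpOnly"))
    # {'session': {'persistent': True, 'secure': False}}
```

A cookie that reports `persistent: True, secure: False` is exactly the kind a sniffer can pick up on the next plain-HTTP visit.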

Not all bad news…

We did find one good solution to this mess. It’s not a sexy new tool but something I hope a lot of us already use: the EFF’s HTTPS Everywhere Firefox plugin, which stopped FireSheep from picking off any of the credentials. We tested it with Gmail, Facebook, and Twitter, and not one of them showed up in FireSheep after we enabled HTTPS Everywhere. I have been using this plugin since it was released and have been extremely satisfied with it. My only complaint is that the HTTPS versions of certain sites have not loaded correctly for me, most notably Wikipedia and Twitter (the latter for about 24 hours). Other than that, it’s been flawless. It’s one of those plugins you can basically set and forget.
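HTTPS Everywhere works by rewriting `http://` URLs to `https://` before the browser sends the request, using per-site rules that pair a "from" regex with a "to" replacement. Below is a simplified Python model of that idea, not the extension’s code: the `RULES` table is invented for illustration and is not copied from the real rulesets, which are XML files.

```python
import re
from urllib.parse import urlsplit

# Hypothetical, simplified ruleset: target host -> list of (from regex, to replacement).
RULES: dict[str, list[tuple[str, str]]] = {
    "twitter.com": [(r"^http://twitter\.com/", "https://twitter.com/")],
    "www.facebook.com": [(r"^http://www\.facebook\.com/", "https://www.facebook.com/")],
}


def rewrite(url: str) -> str:
    """Return the HTTPS version of `url` if a rule for its host matches, else `url` unchanged."""
    host = urlsplit(url).hostname or ""
    for pattern, replacement in RULES.get(host, []):
        new_url, count = re.subn(pattern, replacement, url)
        if count:
            return new_url
    return url


if __name__ == "__main__":
    print(rewrite("http://twitter.com/home"))  # https://twitter.com/home
    print(rewrite("http://example.org/"))      # http://example.org/ (no rule, unchanged)
```

As long as every request to a covered site is rewritten, its session cookie only travels inside TLS, which is consistent with FireSheep showing nothing in our tests. Sites without a rule stay on plain HTTP.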

Using a VPN and avoiding open public wifi connections are also great ideas.

Follow this link for more information on FireSheep, FireShepard and BlackSheep.